Introduction

The purpose of this lab was to become familiar with various image functions in ERDAS Imagine. Working through each function's workflow hands-on made the underlying concepts much easier to understand than reading about them would have. Whatever the project or dataset, image functions can help correct poor imagery so that it suits the task.

Methods

Part 1: Image Subsetting

Image subsetting refers to extracting a smaller study area from a larger image. One method of subsetting uses an inquire box, a rectangle placed over the imagery. The other method uses an Area of Interest (AOI), in which the user defines a more specific shape for the subset, for example by using a shapefile or by digitizing the area.

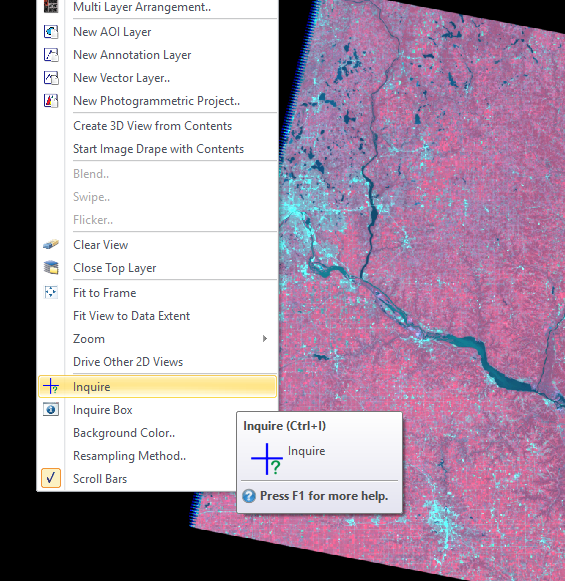

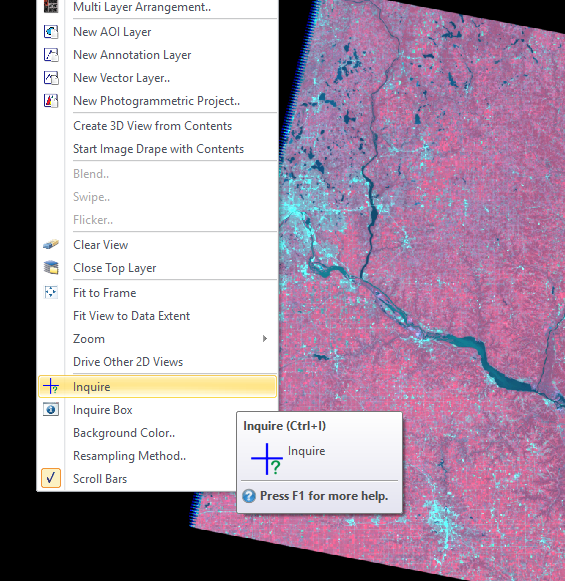

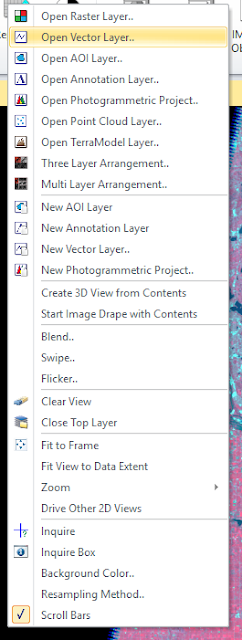

Section 1 - Inquire Box

An inquire box was used first. To do this, ERDAS Imagine was opened and a raster layer was added to the viewer. Then, Inquire Box was selected from the right-click pop-up menu (figure 1).

Figure 1: Inquire Box.

Then, an inquire box was drawn around the Chippewa valley by clicking in the top left corner of the AOI and dragging until the area was sufficiently covered. From there, the

raster tab on the software banner was clicked and

Subset & Chip > Create Subset Image was chosen- a pop-up window appeared. A output folder and name for output file were created and the

From Inquire Box was selected- changing the coordinates of the

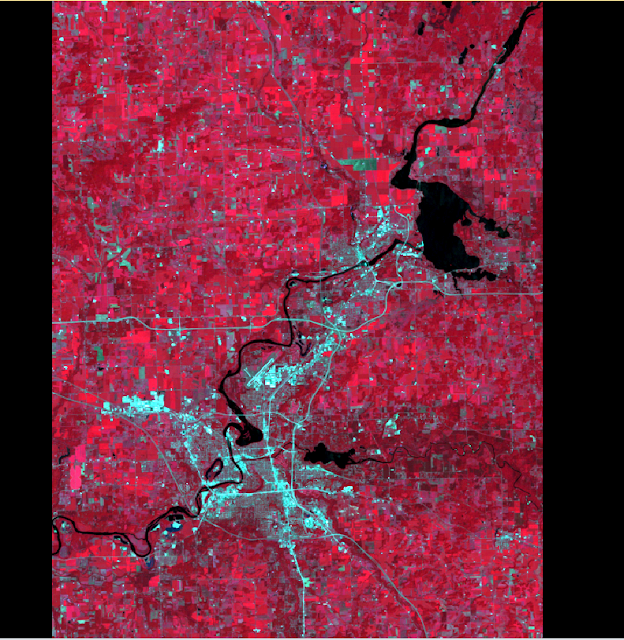

subset definition. The tool was run and created a defined inquire box subset of the original image (figure 2).

Figure 2: Chippewa Valley Inquire Box Subset.
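In array terms, an inquire box subset amounts to converting the box's corner coordinates into row and column indices and slicing out that rectangle. The sketch below is a minimal NumPy illustration, assuming a north-up image stored as a (bands, rows, cols) array with a known upper-left origin and cell size; the function names, coordinates, and scene are hypothetical and are not ERDAS internals.

```python
import numpy as np


def map_to_pixel(x, y, origin_x, origin_y, cell_size):
    """Convert map coordinates to (row, col) in a north-up raster.

    origin_x, origin_y are the map coordinates of the raster's upper-left
    corner; cell_size is the pixel size in map units (e.g. 30 m).
    """
    col = int((x - origin_x) // cell_size)
    row = int((origin_y - y) // cell_size)  # rows increase southward
    return row, col


def inquire_box_subset(image, ul_xy, lr_xy, origin_xy, cell_size):
    """Cut a rectangular subset from a (bands, rows, cols) array.

    ul_xy and lr_xy are the box's upper-left and lower-right corners in map
    coordinates; the pixel containing the lower-right corner is included.
    """
    top, left = map_to_pixel(*ul_xy, *origin_xy, cell_size)
    bottom, right = map_to_pixel(*lr_xy, *origin_xy, cell_size)
    if not (0 <= top <= bottom < image.shape[1] and 0 <= left <= right < image.shape[2]):
        raise ValueError("inquire box falls outside the image")
    return image[:, top:bottom + 1, left:right + 1].copy()


# Hypothetical 6-band, 100 x 120 scene with a 30 m cell and a made-up origin.
scene = np.zeros((6, 100, 120), dtype=np.uint8)
subset = inquire_box_subset(scene, ul_xy=(600_300, 4_980_000), lr_xy=(601_485, 4_978_815),
                            origin_xy=(600_000, 4_981_500), cell_size=30)
print(subset.shape)  # (6, 40, 40)
```

Every band is cut with the same window, so the subset keeps all spectral layers of the original.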

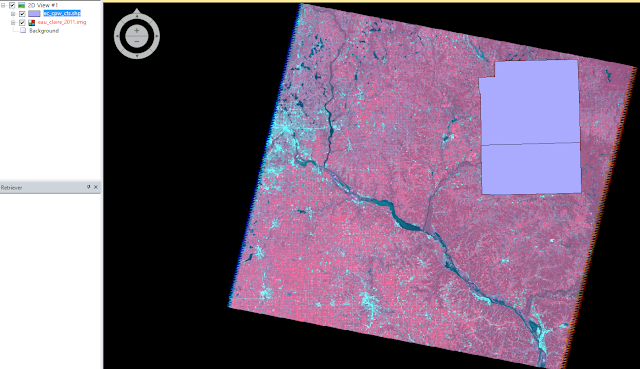

Section 2 - Area of Interest

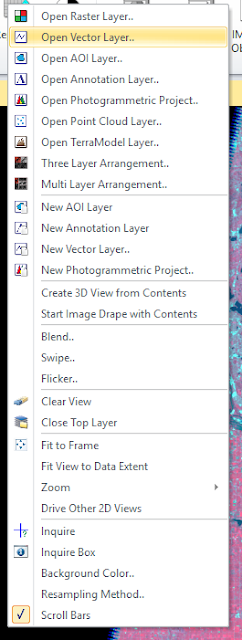

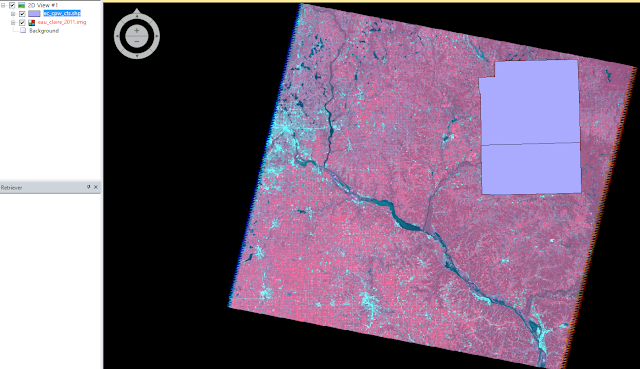

This method started the same way as the last: by bringing a raster file into a new viewer. Then, a shapefile was added by right-clicking in the viewer and choosing Open Vector Layer (figure 3). In the add layer window that opened, Shapefile was selected from the Files of Type selector. A shapefile (.shp) containing Eau Claire and Chippewa counties was used as an overlay (figure 4).

Figure 3: Add vector layer.

Figure 4: Shapefile overlay.

Next, the two counties were selected by holding down the Shift key and clicking each shape. Then, Home > Paste from Selected Object was clicked on the banner. Next, File > Save As > AOI Layer As was selected to save the shape as an Area of Interest file. The procedure from Section 1 of Part 1 was then repeated, except that the saved AOI file, rather than the inquire box, defined the subset. This produced a subset of Eau Claire and Chippewa counties (figure 5).

|

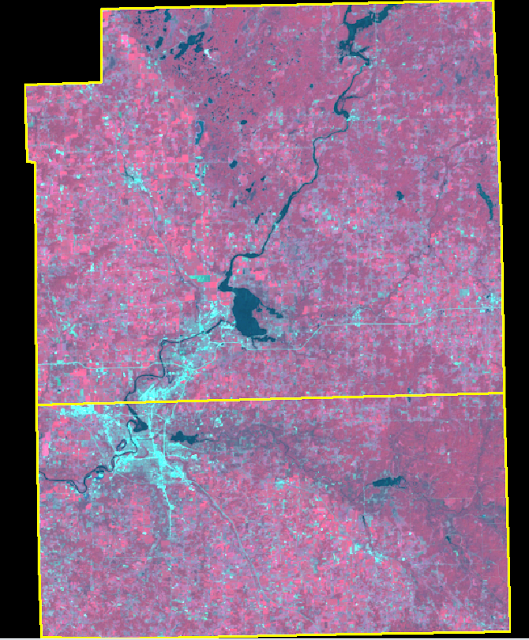

| Figure 5: Eau Claire and Chippewa counties subset. |
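An AOI subset differs from an inquire box in that pixels inside the bounding rectangle but outside the polygon are blanked out. A minimal NumPy sketch, assuming the county polygons have already been rasterized to a boolean mask on the image grid; the rasterizing step is not shown, and `aoi_subset` and the test data are hypothetical.

```python
import numpy as np


def aoi_subset(image, aoi_mask, nodata=0):
    """Clip a (bands, rows, cols) image to an irregular AOI.

    aoi_mask is a boolean (rows, cols) array that is True inside the AOI.
    Returns the AOI's bounding box with pixels outside the AOI set to nodata.
    """
    rows = np.flatnonzero(aoi_mask.any(axis=1))
    cols = np.flatnonzero(aoi_mask.any(axis=0))
    if rows.size == 0:
        raise ValueError("AOI mask is empty")
    r0, r1, c0, c1 = rows[0], rows[-1] + 1, cols[0], cols[-1] + 1
    window = image[:, r0:r1, c0:c1].copy()
    # Blank every band wherever the cropped mask is False.
    window[:, ~aoi_mask[r0:r1, c0:c1]] = nodata
    return window


# Hypothetical 1-band image and an L-shaped AOI.
image = np.full((1, 5, 5), 7, dtype=np.uint8)
mask = np.zeros((5, 5), dtype=bool)
mask[1, 1] = mask[1, 2] = mask[2, 1] = True
print(aoi_subset(image, mask)[0])
```

Cropping to the bounding box first keeps the output as small as the AOI allows, while the mask preserves the irregular county outline.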

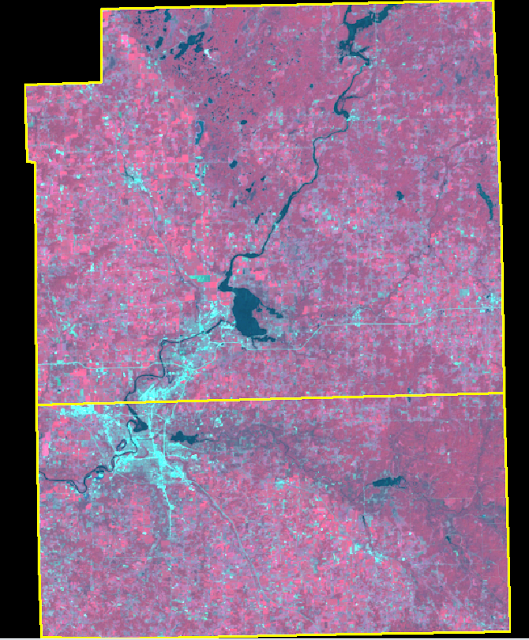

Part 2: Image Fusion

Image fusion refers to combining images to improve an image's spatial resolution. In ERDAS this can be done with the Pan Sharpen tools; one of them, Resolution Merge, merges the finer spatial resolution of one image (here, a panchromatic image) with another, coarser image.

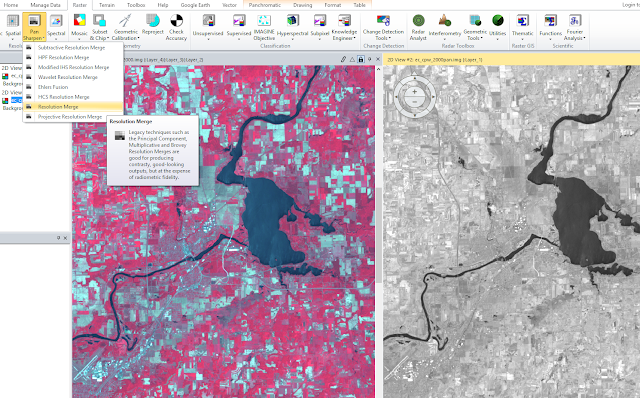

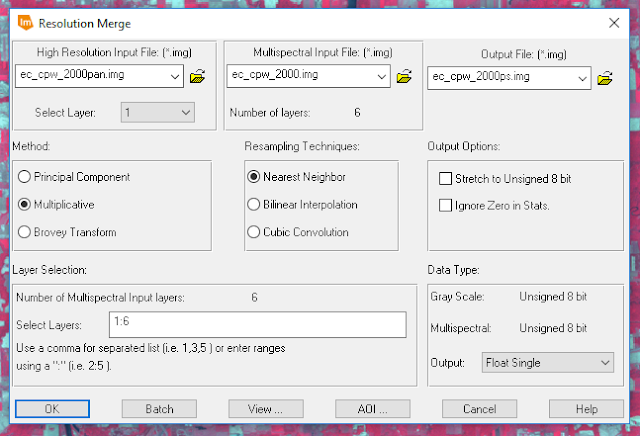

To complete the resolution merge, a 15-meter panchromatic image and a 30-meter reflective image were brought into ERDAS in two separate viewers. Then, Raster > Pan Sharpen > Resolution Merge was selected from the banner (figure 6).

Figure 6: Begin resolution merge.

Figure 7: Resolution merge window.

The parameters for the resolution merge are shown in figure 7. The panchromatic image was set as the High Resolution Input File and the reflective image as the Multispectral Input File. The output file was given a name and location, and the Multiplicative method and Nearest Neighbor resampling technique were chosen. The resulting pan-sharpened image was then compared with the original reflective image.
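The Multiplicative idea can be sketched as multiplying each multispectral band by the panchromatic band after the multispectral image has been resampled onto the 15-meter grid. This NumPy sketch assumes the two grids are aligned with the panchromatic grid exactly twice as fine, uses pixel replication for Nearest Neighbor resampling, and applies a final linear rescale to 0-255; that rescale and the name `multiplicative_merge` are assumptions of the sketch, not documented ERDAS behavior.

```python
import numpy as np


def multiplicative_merge(ms, pan, factor=2):
    """Multiplicative resolution merge sketch.

    ms:  (bands, rows, cols) multispectral image (e.g. 30 m)
    pan: (rows*factor, cols*factor) panchromatic band (e.g. 15 m)
    """
    fused = []
    for band in ms.astype(np.float64):
        # Nearest neighbour upsampling: copy each coarse pixel into a factor x factor block.
        up = np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)
        if up.shape != pan.shape:
            raise ValueError("pan band must be exactly `factor` times the multispectral grid")
        product = up * pan  # inject the pan band's spatial detail
        lo, hi = product.min(), product.max()
        if hi > lo:
            scaled = (product - lo) / (hi - lo) * 255.0  # assumed rescale to 8 bits
        else:
            scaled = np.zeros_like(product)
        fused.append(np.round(scaled).astype(np.uint8))
    return np.stack(fused)


ms = np.array([[[10, 20], [30, 40]]], dtype=np.uint8)     # hypothetical 1-band, 2 x 2 image
pan = np.arange(1, 17, dtype=np.uint8).reshape(4, 4)       # hypothetical 4 x 4 pan band
fused = multiplicative_merge(ms, pan)
print(fused.shape, fused.dtype)
```

Because the output takes its pixel grid from the panchromatic band, within-block variation in each fused band comes entirely from the pan values.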

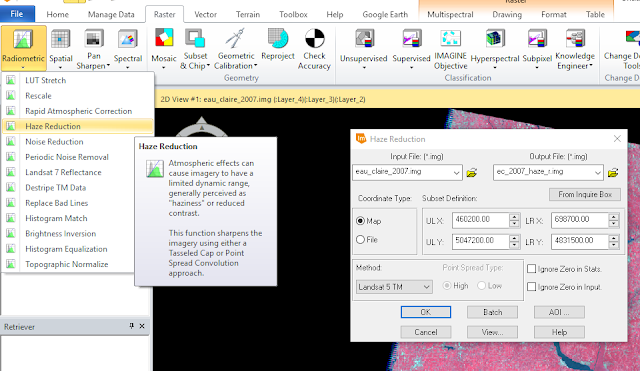

Part 3: Simple Radiometric Enhancement

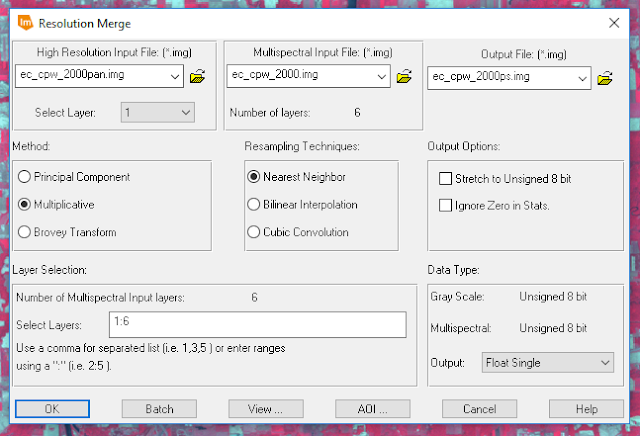

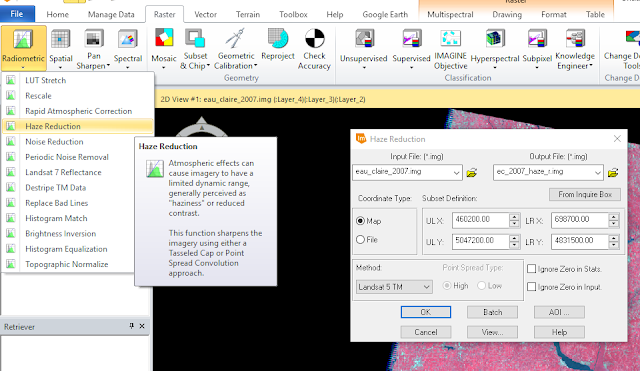

The radiometric resolution of an image refers to the number of distinct brightness values it can record, which is set by its bit depth (an 8-bit image, for example, can store 256 levels). Atmospheric haze can reduce the variation in values actually present in an image, lowering its contrast. To correct this, the Haze Reduction radiometric tool was used.

A reflective image was opened in an ERDAS viewer, and selecting Radiometric > Haze Reduction under the Raster tab opened a haze reduction window (figure 8).

|

| Figure 8: Haze Reduction window. |

|

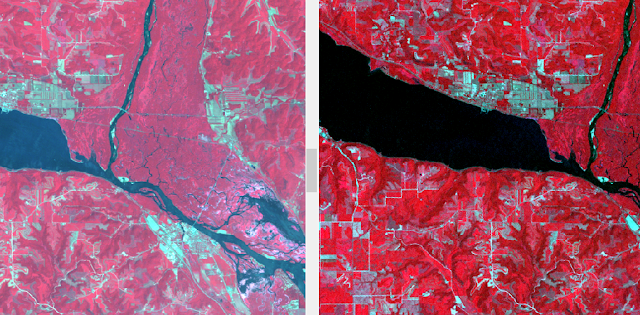

| Figure 9: Haze Reduction Result. |

A name was chosen for the output image, and the result is shown on the right side of figure 9. The difference in color contrast and variation between the two images is clearly visible.
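ERDAS's Haze Reduction tool applies its own algorithm, which is not reproduced here. To illustrate the general idea of removing an additive haze offset, the sketch below swaps in dark-object subtraction, a simpler and widely taught correction: the darkest value in each band (ideally from deep water or shadow) is assumed to be haze and is subtracted from every pixel. The function name and test values are hypothetical.

```python
import numpy as np


def dark_object_subtraction(image, percentile=0.0):
    """Simple haze correction by dark-object subtraction.

    image: (bands, rows, cols). For each band, the value at the given low
    percentile (0 = the band minimum) is treated as the haze offset and
    subtracted; results are clipped at zero.
    """
    corrected = np.empty_like(image)
    for i, band in enumerate(image):
        haze = np.percentile(band, percentile)
        corrected[i] = np.clip(band.astype(np.float64) - haze, 0, None).astype(image.dtype)
    return corrected


# Hypothetical 2-band, 2 x 2 image; each band carries a different haze offset.
image = np.array([[[12, 20], [15, 40]],
                  [[5, 5], [9, 100]]], dtype=np.uint8)
print(dark_object_subtraction(image))
```

Subtracting an offset removes the haze brightness but does not by itself widen the value range; in practice it is usually followed by a contrast stretch.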

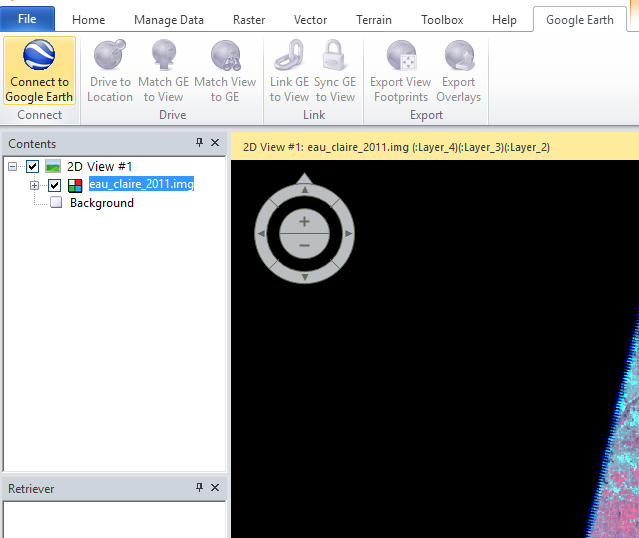

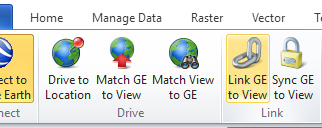

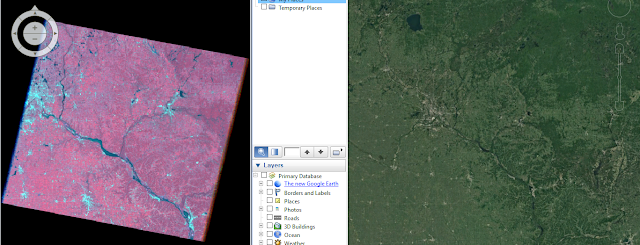

Part 4: Linking ERDAS Viewer to Google Earth

When interpreting aerial imagery, it can be difficult to identify certain shapes and objects. Other viewpoints help with those determinations: the best option is visiting the place, and the second best is using a 3-D viewer such as Google Earth to obtain annotation information as well as oblique and ground-level views of places.

Figure 10: Begin connecting to Google Earth.

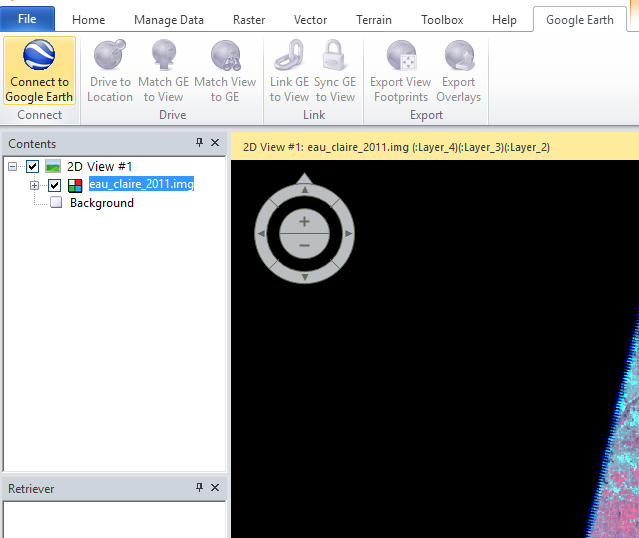

As shown in figure 10, the

Google Earth tab was navigated to and the highlighted

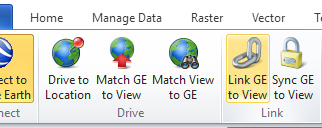

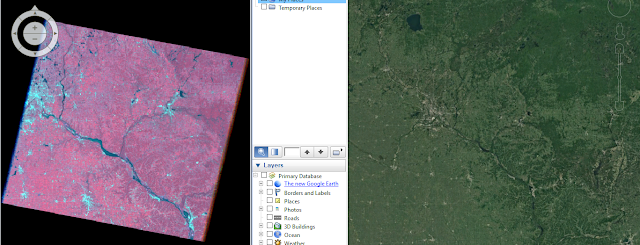

Connect to Google Earth button was clicked. This action opened Google Earth in another viewer, splitting the software as shown in figure 12. The

Link GE to View button was then clicked to synchronize the two viewers (figure 11).

Figure 11: Linking ERDAS Viewer with Google Earth.

Figure 12: Synchronized split view (ERDAS Viewer left, Google Earth right).

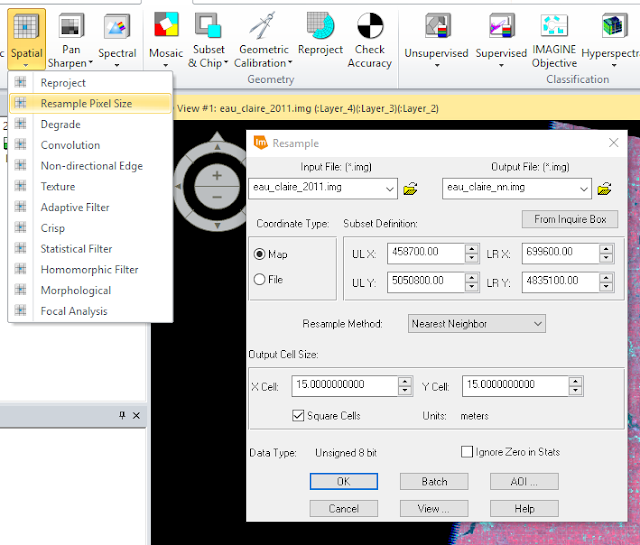

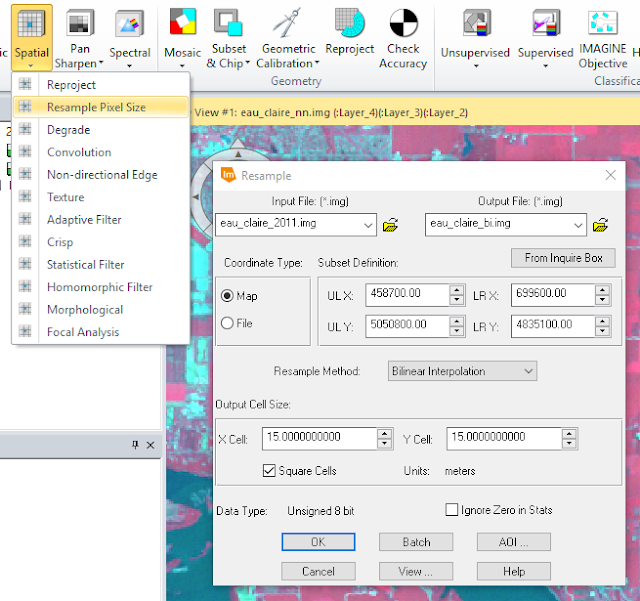

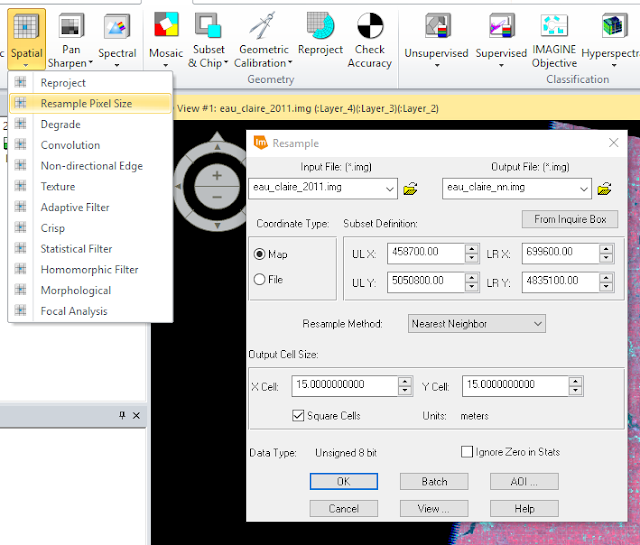

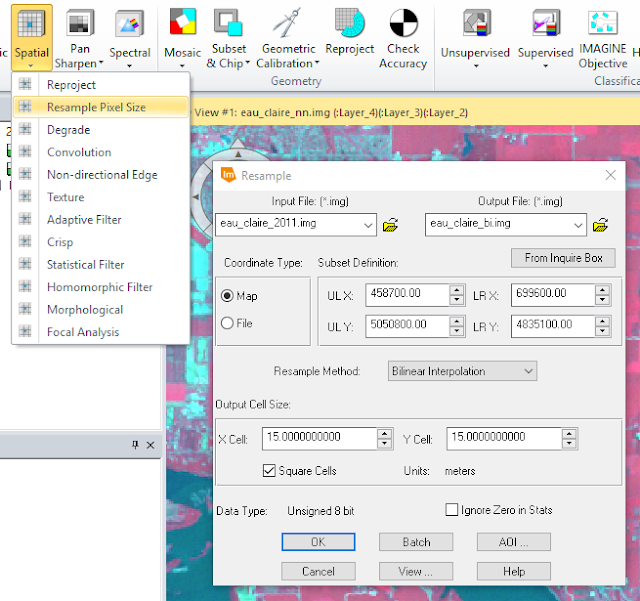

Part 5: Resampling Imagery

Resampling imagery refers to changing an image's pixel size from the size at which it was originally produced. Reducing the pixel size does not add spatial detail that the sensor did not capture; each new cell's value is estimated from the original pixels. In this part of the lab, two resampling techniques were applied to the same original image: Nearest Neighbor and Bilinear Interpolation.

First, a 30-meter reflective image was brought into the ERDAS viewer, and the Raster banner tab was clicked to open the raster tools. Next, the Spatial > Resample Pixel Size tool was used (figure 13).

Figure 13: Using Nearest Neighbor Resampling.

Figure 14: Using Bilinear Interpolation Resampling.

As shown in figures 13 and 14, the Output Cell Sizes were set to 15 meters and Square Cells was checked. The two outputs differed only slightly because each used a different resampling method.
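The difference between the two methods is easiest to see on a tiny band. In this NumPy sketch (a hypothetical `resample` function, assuming output pixel centers are mapped back onto input pixel centers, which may differ from ERDAS's exact conventions), Nearest Neighbor copies each 30-meter value into a 2 × 2 block of 15-meter cells, while Bilinear Interpolation computes each new cell as a distance-weighted average of the four nearest original pixels.

```python
import numpy as np


def resample(band, in_cell, out_cell, method="nearest"):
    """Resample a 2-D band from in_cell to out_cell (same map units)."""
    scale = in_cell / out_cell
    n_rows, n_cols = band.shape
    out_rows, out_cols = int(round(n_rows * scale)), int(round(n_cols * scale))
    # Centre of each output row/column, expressed in input pixel coordinates.
    r = (np.arange(out_rows) + 0.5) / scale - 0.5
    c = (np.arange(out_cols) + 0.5) / scale - 0.5
    src = band.astype(np.float64)

    if method == "nearest":
        # Take the value of whichever input pixel centre is closest.
        ri = np.clip(np.floor(r + 0.5).astype(int), 0, n_rows - 1)
        ci = np.clip(np.floor(c + 0.5).astype(int), 0, n_cols - 1)
        return src[np.ix_(ri, ci)]

    if method == "bilinear":
        # Distance-weighted average of the four surrounding input pixel centres.
        r = np.clip(r, 0, n_rows - 1)
        c = np.clip(c, 0, n_cols - 1)
        r0, c0 = np.floor(r).astype(int), np.floor(c).astype(int)
        r1, c1 = np.minimum(r0 + 1, n_rows - 1), np.minimum(c0 + 1, n_cols - 1)
        fr, fc = (r - r0)[:, None], (c - c0)[None, :]
        top = src[np.ix_(r0, c0)] * (1 - fc) + src[np.ix_(r0, c1)] * fc
        bottom = src[np.ix_(r1, c0)] * (1 - fc) + src[np.ix_(r1, c1)] * fc
        return top * (1 - fr) + bottom * fr

    raise ValueError(f"unknown method: {method}")


band = np.array([[0, 10], [20, 30]], dtype=np.uint8)   # hypothetical 30 m band
print(resample(band, 30, 15, "nearest"))
print(resample(band, 30, 15, "bilinear"))
```

Away from edges the two outputs are close. They differ mainly where values change sharply, because Bilinear Interpolation creates intermediate values that Nearest Neighbor never produces.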

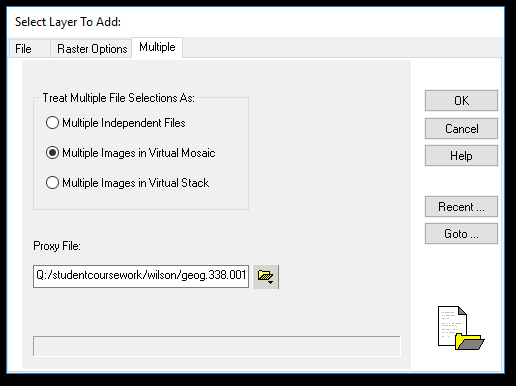

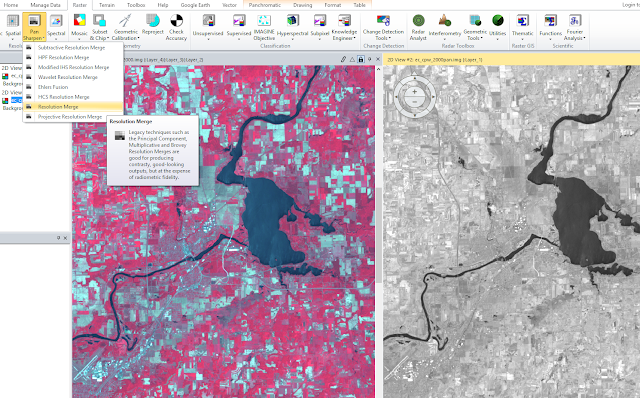

Part 6: Image Mosaicking

Mosaicking refers to merging two or more overlapping images into a single image for analysis and interpretation. The goal is a seamless transition along the boundary between the images. Two ERDAS mosaicking tools were used in this part of the lab: Mosaic Express and MosaicPro.

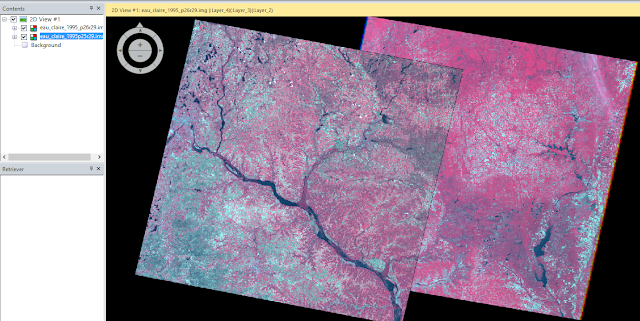

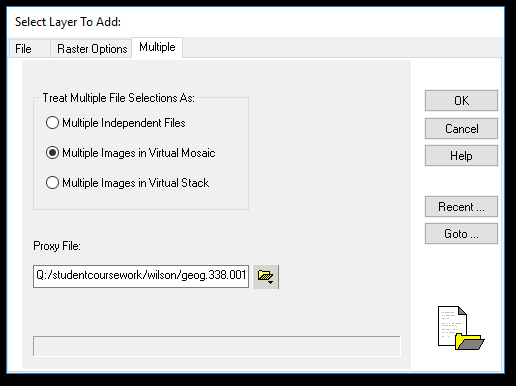

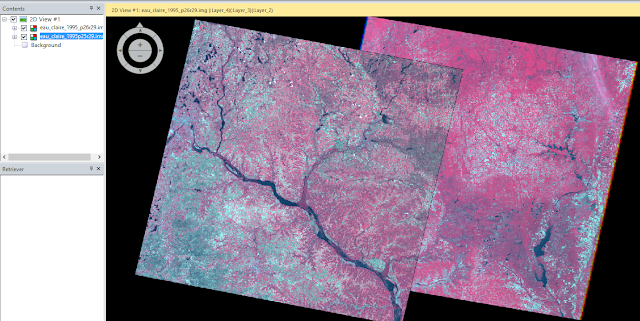

To begin, two raster layers were added to the viewer. This was done the same way as adding a single raster layer, except that before clicking OK, the Multiple tab in the Select Layer to Add window was clicked and the Multiple Images in Virtual Mosaic option was selected (figure 15). After the second image was added, the viewer looked like figure 16.

Figure 15: Add multiple images window.

Figure 16: Add multiple images viewer.

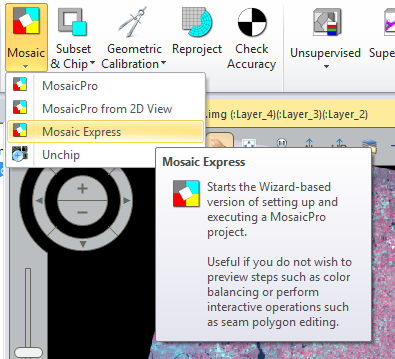

Once the viewer was set up, the first mosaic tool, Mosaic Express, was used.

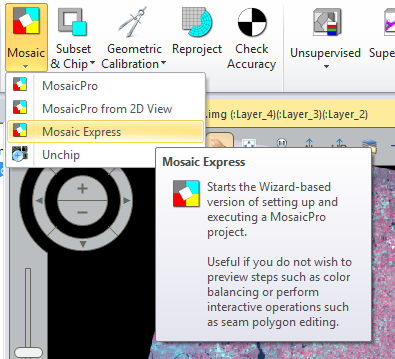

Section 1 - Mosaic Express

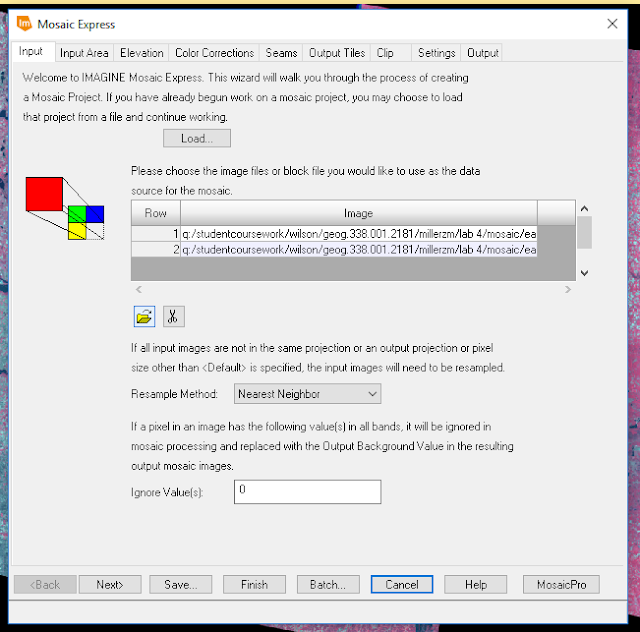

The Mosaic > Mosaic Express button under the Raster banner tab was clicked and a pop-up window opened (see figures 17 and 18).

Figure 17: Open Mosaic Express tool.

Figure 18: Mosaic Express window.

The highlighted folder icon near the center left of figure 18 was used to select the two images (the same ones shown in figure 16). Nearest Neighbor was used as the resampling method.

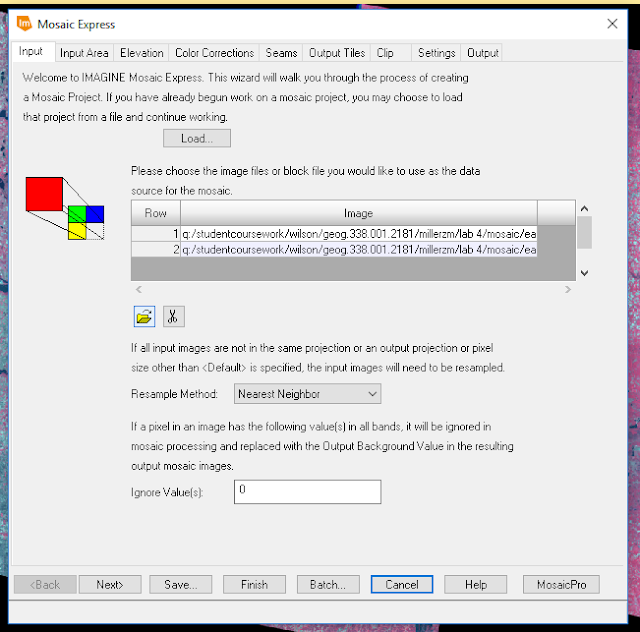

|

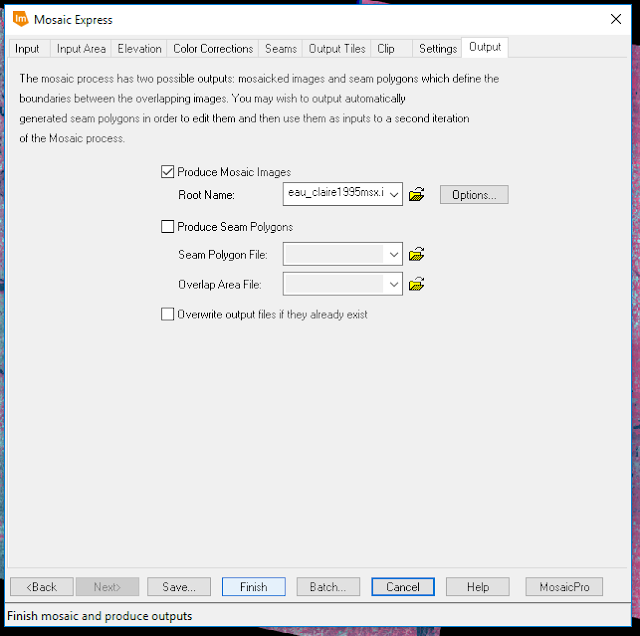

| Figure 19: Finishing Mosaic Express. |

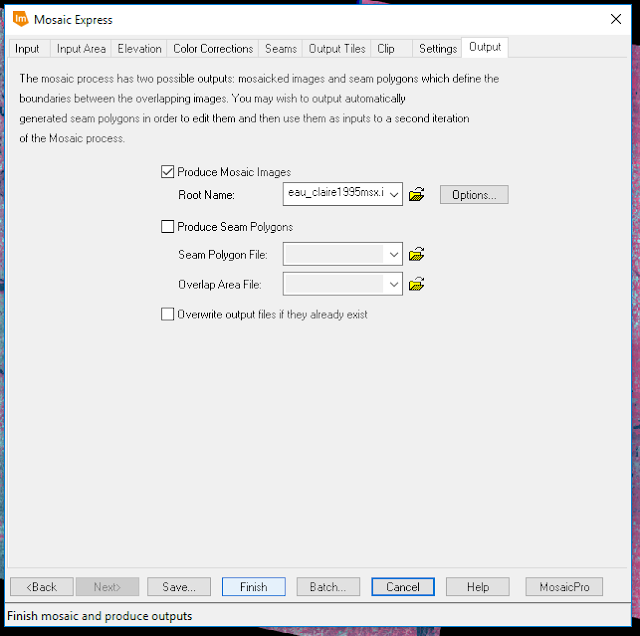

The remaining parameters were left at their defaults, and a name for the output file was entered on the last page of the Mosaic Express window (figure 19). The result is shown in figure 20.

|

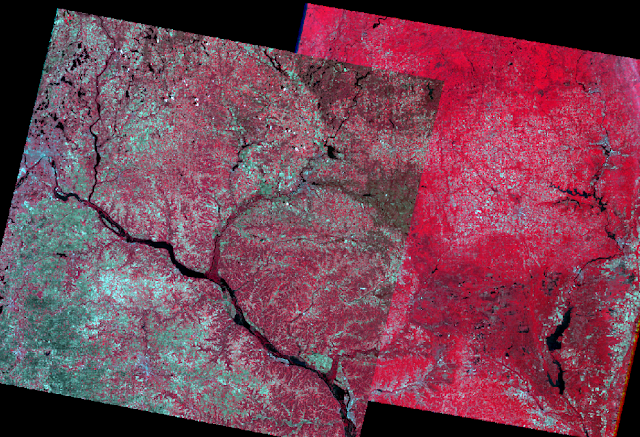

| Figure 20: Mosaic Express output. |
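Without any color balancing, a mosaic is essentially a paste operation onto a shared grid, which is why a brightness difference between scenes remains as a visible seam. The sketch below assumes both images are already on the same grid with known integer pixel offsets and uses a simple "last image wins" rule in the overlap; that rule and the `simple_mosaic` name are illustrative assumptions, not necessarily what Mosaic Express does internally.

```python
import numpy as np


def simple_mosaic(images, offsets, shape, nodata=0):
    """Paste 2-D bands onto one canvas; later images overwrite earlier ones.

    offsets[i] is the (row, col) of image i's upper-left pixel on the canvas.
    No colour balancing is applied, so any brightness difference between
    the inputs remains visible as a seam.
    """
    canvas = np.full(shape, nodata, dtype=images[0].dtype)
    for img, (r, c) in zip(images, offsets):
        h, w = img.shape
        if r < 0 or c < 0 or r + h > shape[0] or c + w > shape[1]:
            raise ValueError("image does not fit on the canvas")
        region = canvas[r:r + h, c:c + w]  # a view, so writes land in canvas
        valid = img != nodata              # leave the canvas alone where img has no data
        region[valid] = img[valid]
    return canvas


west = np.full((2, 3), 50, dtype=np.uint8)   # hypothetical darker scene
east = np.full((2, 3), 90, dtype=np.uint8)   # hypothetical brighter scene
print(simple_mosaic([west, east], [(0, 0), (0, 2)], shape=(2, 5)))
```

The abrupt jump from 50 to 90 in the output is the kind of seam that color correction in MosaicPro is meant to reduce.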

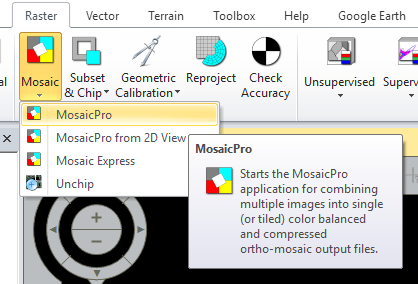

Section 2 - MosaicPro

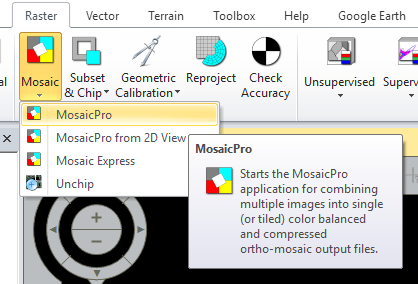

For this section of Part 6, the same setup was used, except that MosaicPro was opened instead of Mosaic Express (figure 21).

Figure 21: MosaicPro tool.

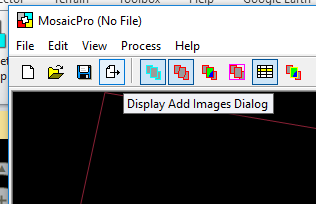

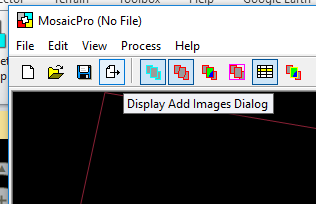

Once the application was open, the images were added (figure 22). For the first image, the Image Area Options tab was selected and the Compute Active Area button was clicked. The same steps were repeated for the second image, after which outlines of both images were displayed in the viewer.

Figure 22: Display Add Images Dialog button (highlighted blue page button with arrow).

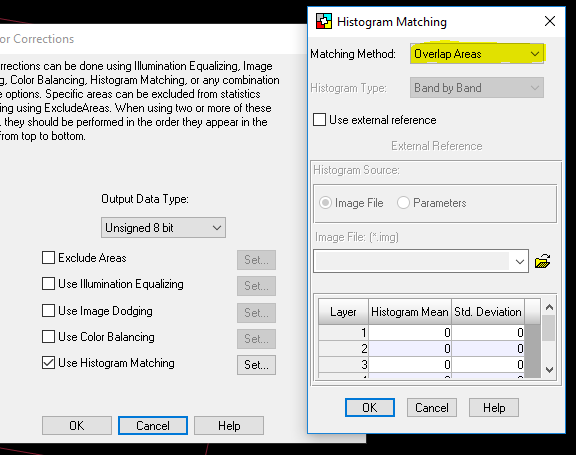

Next, the

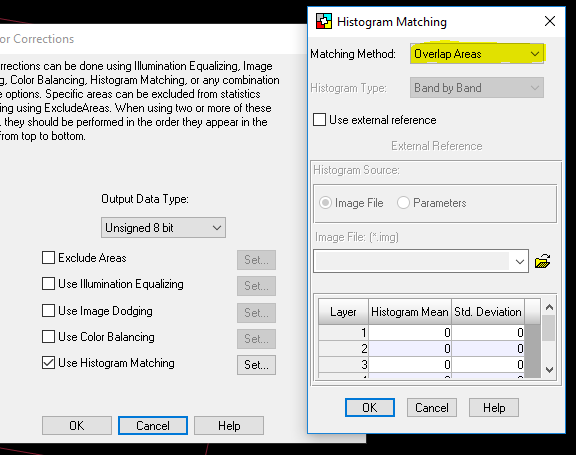

Color Corrections icon in the application banner was clicked and

Histogram Matching option was used.

Figure 23: Color corrections window (icon displayed as highlighted on banner).

Once the Use Histogram Matching option was checked, the Set... button became available (figure 23). Clicking it opened the histogram matching parameters window, where the Matching Method was set to Overlap Areas (figure 24).

Figure 24: Set Histogram matching method.
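Histogram matching with the Overlap Areas method can be thought of as building a lookup table from the pixels the two images share and then applying it to the whole image being adjusted. Below is a minimal sketch of standard CDF-based matching for 8-bit bands, assuming both images sit on the same grid and a boolean overlap mask is available; the function name and data are hypothetical, and MosaicPro's implementation may differ.

```python
import numpy as np


def match_histogram_overlap(source, reference, overlap):
    """Remap an 8-bit source band so its histogram matches the reference.

    Only pixels where overlap is True are used to build the two histograms;
    the resulting lookup table is then applied to every source pixel.
    """
    src_hist = np.bincount(source[overlap], minlength=256)
    ref_hist = np.bincount(reference[overlap], minlength=256)
    src_cdf = np.cumsum(src_hist) / src_hist.sum()
    ref_cdf = np.cumsum(ref_hist) / ref_hist.sum()
    # For each source grey level, pick the lowest reference level whose
    # cumulative frequency is at least as large.
    lut = np.searchsorted(ref_cdf, src_cdf, side="left")
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[source]


# Hypothetical overlap where the reference scene is uniformly 10 levels brighter.
source = np.array([[10, 20, 30], [40, 50, 60]], dtype=np.uint8)
reference = source + 10
overlap = np.ones(source.shape, dtype=bool)
print(match_histogram_overlap(source, reference, overlap))
```

Restricting the statistics to the overlap means the correction is driven by pixels that show the same ground in both scenes, rather than by differences in what each scene happens to cover.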

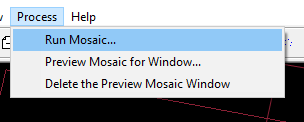

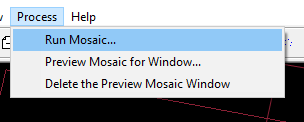

After all of the parameters were set, the Run Mosaic button was clicked (figure 25), generating the mosaicked image (figure 26).

Figure 25: Run MosaicPro tool.

Figure 26: Results of MosaicPro.

Part 7: Binary Change Detection - Image Differencing

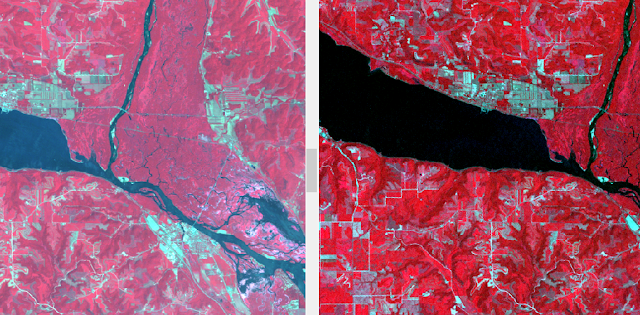

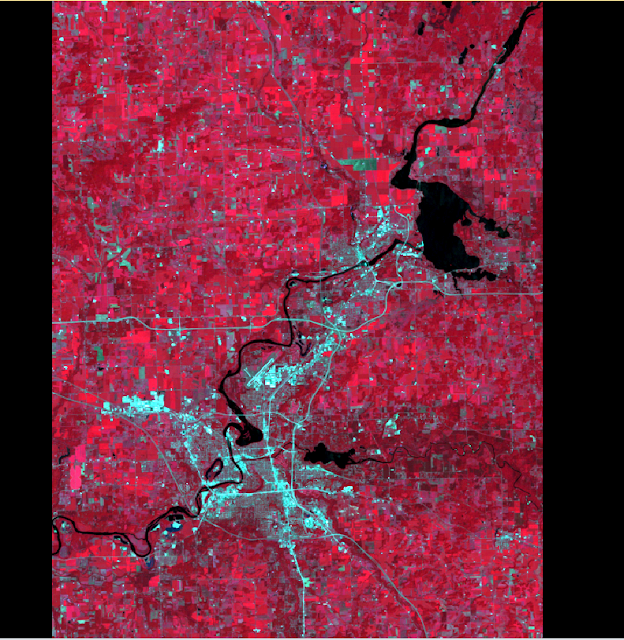

Binary change detection, also known as image differencing, compares two images of the same area taken at different times in order to map where change has occurred. In this part of the lab, the goal was to create a difference image and map the binary (change/no change) results.

Section 1 - Create a difference image

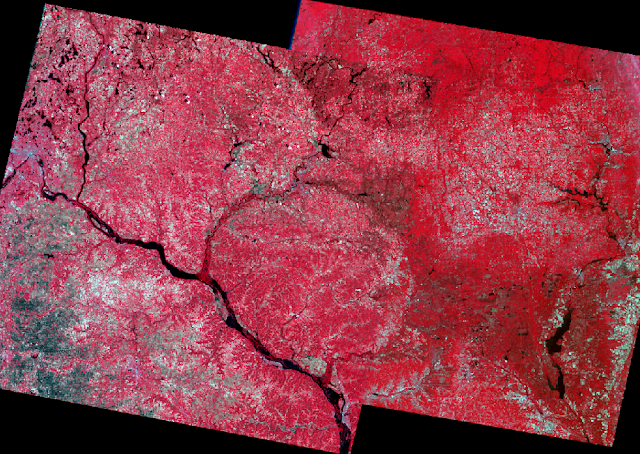

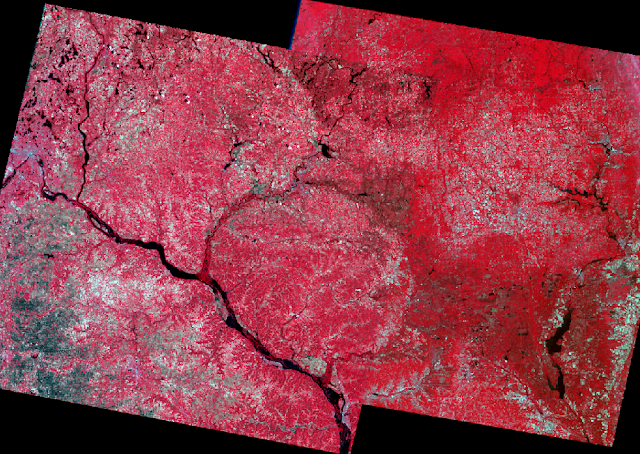

First, two images were brought into separate ERDAS viewers: one of the Eau Claire area taken in 1991 and one of the same area taken in 2011, a 20-year difference. At a quick glance, there weren't many noticeable areas of change. Image differencing, however, makes the changes much easier to see, because the difference values can be thresholded into a binary output in which each pixel is classified as either changed or unchanged.

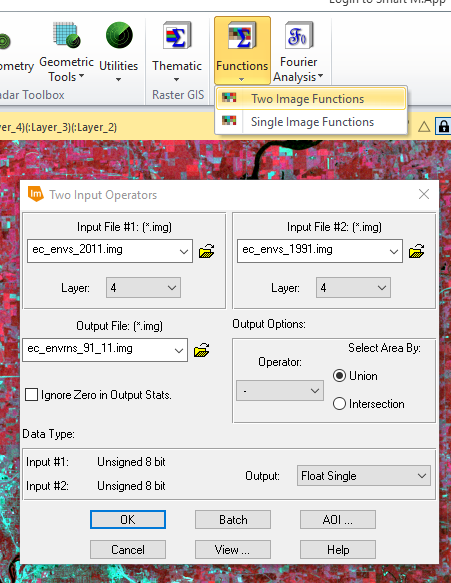

From the Raster banner tab in ERDAS, Functions > Two Image Functions was selected and a Two Input Operators pop-up window opened (figure 27).

Figure 27: Two Image Functions - Two Input Operators.

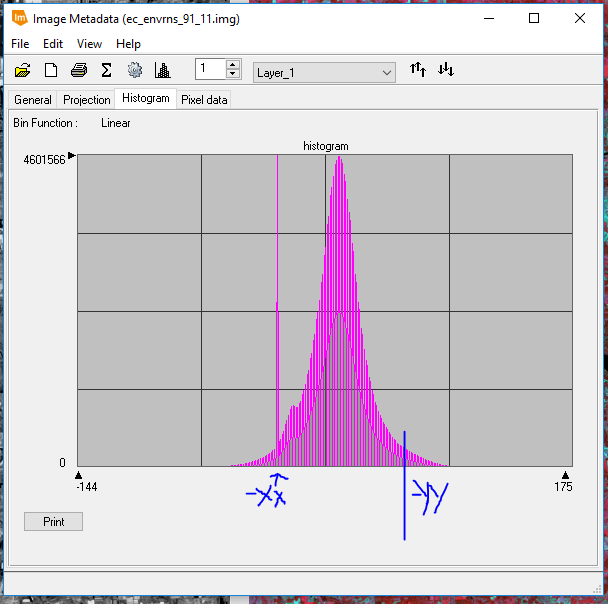

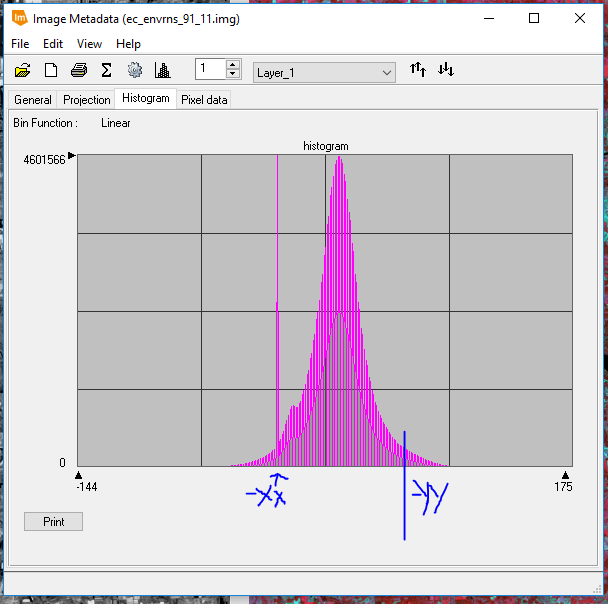

The 2011 image was selected as Input File #1 and the 1991 image as Input File #2, with layer 4 used as the input layer for each. The output file was given a name and, under the Output Options section, the Operator was set to subtraction (-) and the Select Area By: Union option was chosen. The tool was run, and the histogram of the resulting difference image is shown in figure 28.

Figure 28: Change vs no change threshold (anything outside the blue lines is classified as having changed).
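The Two Image Functions step subtracts the same band of two dates pixel by pixel, and pixels far from the mean difference are treated as change. This NumPy sketch assumes the two images already share one grid (so the Union extent handling is not shown) and assumes the change/no change bounds sit `k` standard deviations either side of the mean; `k` is a free parameter here because its value for figure 28 is not stated, and the function names and data are hypothetical.

```python
import numpy as np


def difference_image(later, earlier):
    """Pixel-wise later - earlier for the same band on the same grid.

    Inputs are widened to int16 so negative differences are not wrapped
    around, as they would be in unsigned 8-bit arithmetic.
    """
    return later.astype(np.int16) - earlier.astype(np.int16)


def flag_change(diff, k):
    """Flag pixels more than k standard deviations from the mean difference."""
    mean, std = diff.mean(), diff.std()
    lower, upper = mean - k * std, mean + k * std
    changed = (diff < lower) | (diff > upper)
    return changed, (lower, upper)


# Hypothetical band 4 values: one pixel brightened between the two dates.
b4_1991 = np.zeros((10, 10), dtype=np.uint8)
b4_2011 = b4_1991.copy()
b4_2011[4, 4] = 100
diff = difference_image(b4_2011, b4_1991)
changed, bounds = flag_change(diff, k=3)
print(int(changed.sum()), bounds)
```

Widening to a signed type matters: in plain uint8 arithmetic, 10 - 20 would wrap around to 246 and look like a large increase.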

Section 2 - Mapping change pixels in difference image using Modeler

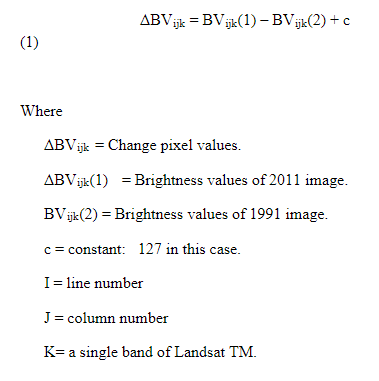

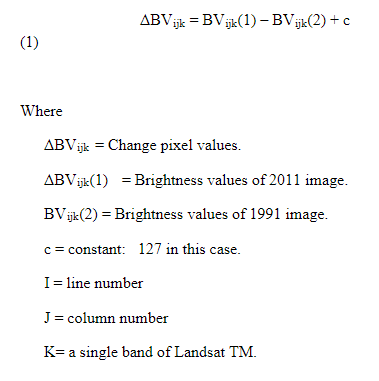

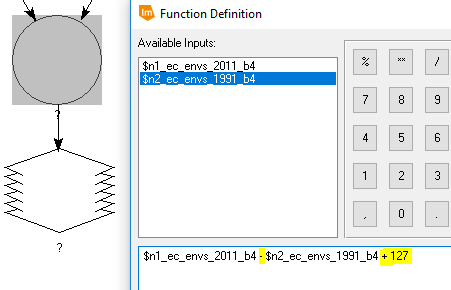

Once the difference image was created, a model was built to eliminate negative difference values and produce an image showing the change pixels. The equation for this step is shown in figure 29.

Figure 29: Change pixel equation.

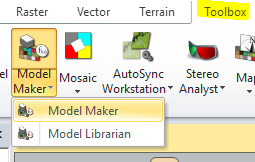

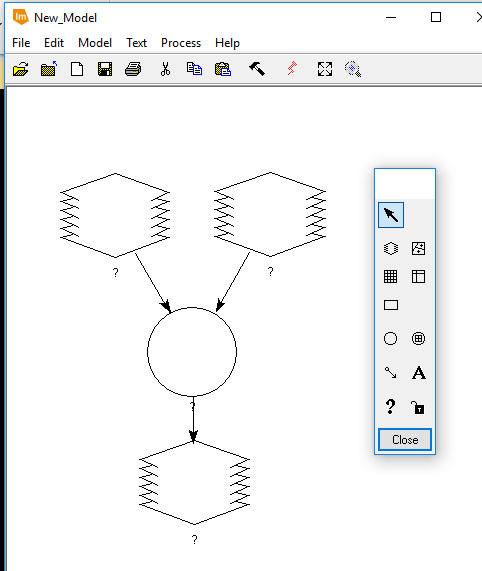

To apply this equation, Toolbox > Model Maker > Model Maker was selected from the banner, opening a blank model (figures 30 and 31).

Figure 30: Open Model Maker.

Figure 31: New model with input and output rasters (multiple layers shape), and operation controls (circle).

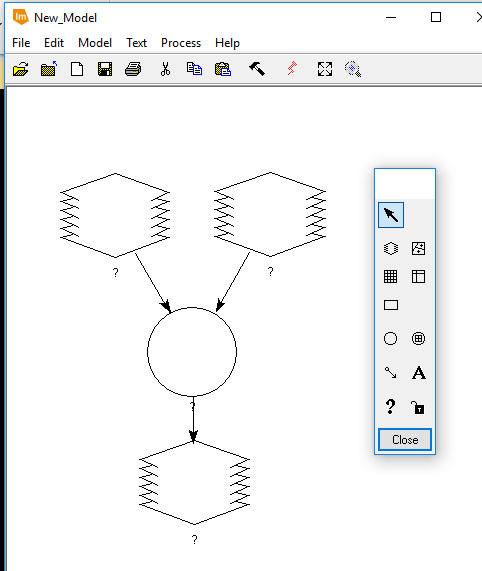

One input raster was the 2011 near-infrared (NIR) band image and the other was the 1991 NIR band image. The function object (the circle shown in figure 31) subtracted the 2011 image from the 1991 image and added a constant of 127 (figure 32).

Figure 32: Function object operation.
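Adding the constant of 127 shifts the difference values so that unchanged pixels sit near 127 instead of 0, which keeps most differences non-negative. A short sketch of that model step, assuming 8-bit inputs on the same grid; `offset_difference`, `first`, and `second` are hypothetical names, and the argument order should match whichever order the model subtracts in.

```python
import numpy as np


def offset_difference(first, second, offset=127):
    """Return first - second + offset, computed in int16.

    Shifting by the offset moves 'no change' pixels from 0 to the offset,
    so most differences become non-negative. Results below 0 or above 255
    are still possible for extreme changes; this sketch leaves them
    unclipped so they can be inspected.
    """
    return first.astype(np.int16) - second.astype(np.int16) + offset


a = np.array([[80, 80], [80, 200]], dtype=np.uint8)   # hypothetical NIR values
b = np.array([[80, 60], [100, 50]], dtype=np.uint8)
print(offset_difference(a, b))
```

The unchanged pixel comes out at exactly 127, while the large increase lands above 255, which shows why extreme changes can still exceed an 8-bit range.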

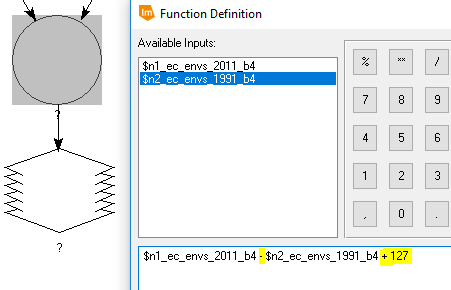

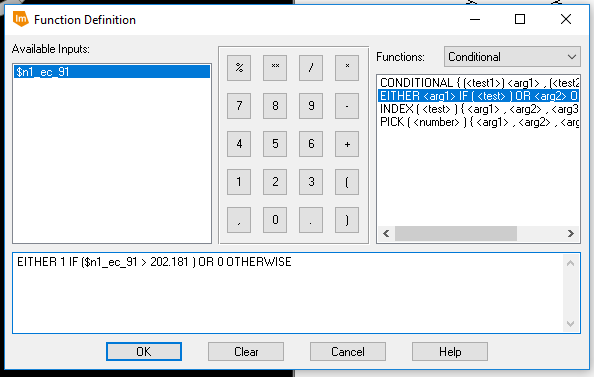

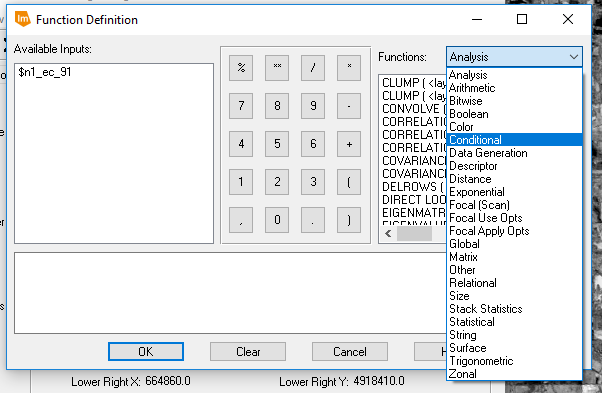

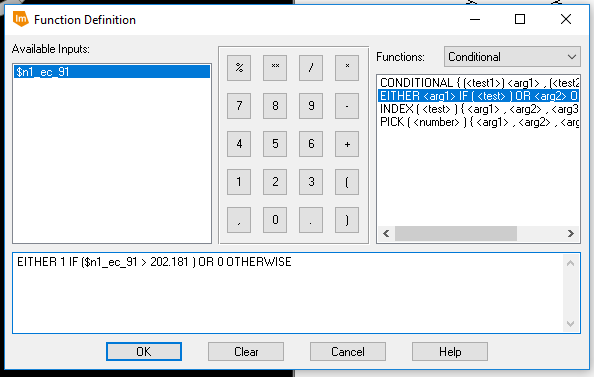

The output raster was given a name and the model was run by clicking the red lightning bolt shown in figure 31. The resulting image's metadata was used in the next part of this section. A second model was created in the same way as the first, but this time with only one input raster (the image just created), one function object, and one output raster. Double-clicking the function object opened the function definition window, where Conditional was chosen under Functions (figure 33). The function object operation for this model is shown in figure 34.

Figure 33: Choose conditional functions.

Figure 34: Defining binary difference function.

The

202.181 shown in figure 34's function was found by taking the

mean value from the difference image's histogram information and adding

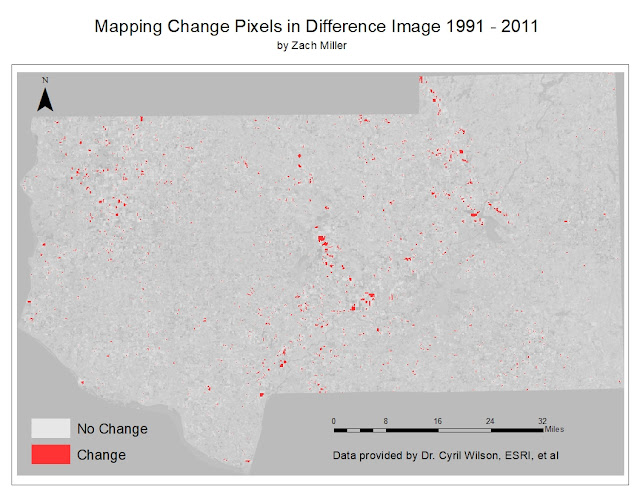

3 times (x) standard deviation taken from the difference image. The model was ran and produced the following image (figure 35).

|

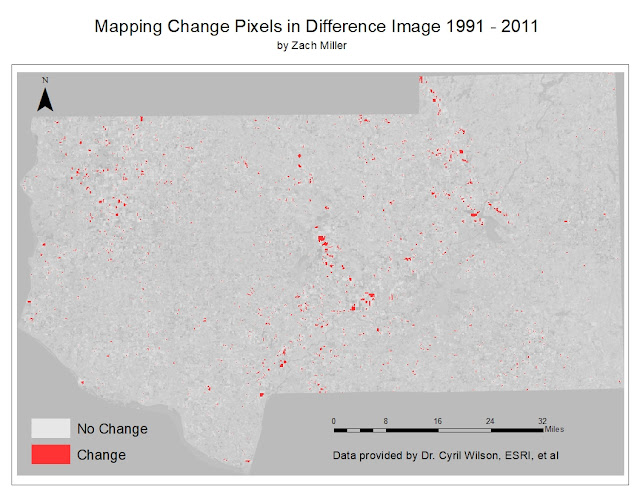

| Figure 35: Binary difference image result. |
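The conditional model marks a pixel as changed when its value exceeds mean + 3 × standard deviation of the difference image. A NumPy sketch of that rule, using made-up data rather than the lab images (so it does not reproduce the 202.181 value), and assuming the population standard deviation over all pixels; ERDAS may compute its statistics differently. The name `binary_change` is hypothetical.

```python
import numpy as np


def binary_change(diff, n_std=3.0):
    """Conditional model sketch: 1 where diff > mean + n_std * std, else 0."""
    threshold = diff.mean() + n_std * diff.std()
    return (diff > threshold).astype(np.uint8), threshold


diff = np.full((10, 10), 127, dtype=np.int16)  # hypothetical offset difference image
diff[0, 0] = 227                               # one strongly changed pixel
change, threshold = binary_change(diff)
print(int(change.sum()), round(float(threshold), 2))
```

Like the single threshold described above, this sketch flags only pixels above the mean; a two-sided version would also flag values below mean - 3 × standard deviation.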

Because this image was difficult to work with and analyze, it was brought into ArcMap and symbolized more clearly. The result is shown in figure 36.

Figure 36: Binary change map.

Results/Discussion

Part 1 - Section 1: I thought the Inquire Box was a good tool for defining an area of interest when the exact AOI doesn't need to be digitized or no shapefile of it is readily available. For quick analysis of a general area, this is a good method.

Part 1 - Section 2: I thought the AOI method was a good tool, not only for creating a subset but for actually defining the area of interest when a shapefile was available. Overall, either the Inquire Box or the AOI method can produce a good subset.

Part 2: I found the pan-sharpening a bit confusing because I didn't see much difference between the result and the original image. I also expected the output to be a sharpened version of the multispectral image, not of the panchromatic image.

Part 3: I think the haze reduction function could be very beneficial for image interpretation and analysis. Because color variation is so important to both, it helps that this function does a good job of correcting hazy imagery and bringing out more distinct colors.

Part 4: Linking the ERDAS Imagine viewer to Google Earth is a great aid for image classification. Having a 3-D, ground-level viewer within the same interface could streamline the classification process, especially since the two views can be synchronized!

Part 5: I didn't quite grasp the utility of this function. I understand the difference between the Nearest Neighbor and Bilinear Interpolation methods; however, the difference between the two results wasn't very noticeable to me. I'm sure there are good uses for either resampling method, but I couldn't distinguish their results.

Part 6 - Section 1: The Mosaic Express tool was terrible. As shown in figure 20, the tool hardly blends the images together, which quite honestly defeats the purpose of mosaicking.

Part 6 - Section 2: The MosaicPro tool proved to be a much better way to mosaic images. As shown in figure 26, the color correction between the images is much better and the border, while still visible, is far closer to being seamless than the result of Mosaic Express.

Part 7 - Section 1: This section was a bit confusing to me, because the output difference image didn't look very different from either of the input images. Once we used the resulting histogram to obtain the mean and standard deviation values, I understood the purpose of this step.

Part 7 - Section 2: I found this tool to be very interesting and useful. Having the binary results can greatly help as a reference for reclassification.
