Introduction

The purpose of this lab was to practice and understand the geometric correction of raster imagery. To do this, ERDAS Imagine was used with a dataset containing multispectral images, USGS imagery, and reference images of Chicago and Sierra Leone.

Methods

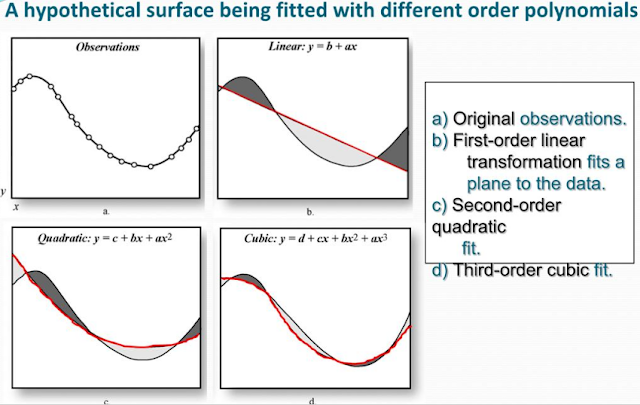

In order to achieve the initiatives outlined in the previous section, it was important to understand how

geometric correction worked before preforming this action on imagery. In the first part of this lab, a multispectral image of the Chicago area was corrected using a USGS rendering as a reference. Only three

ground control points (GCPs) were required since a

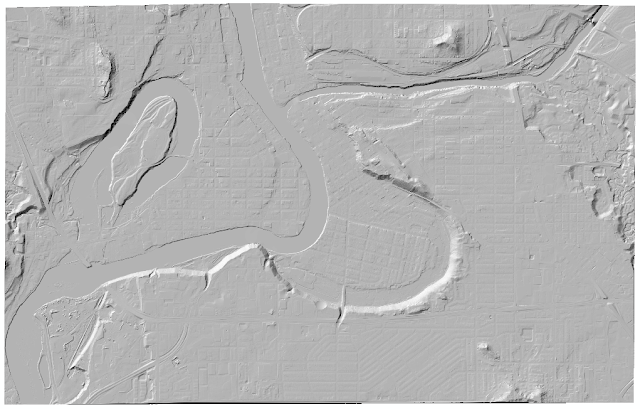

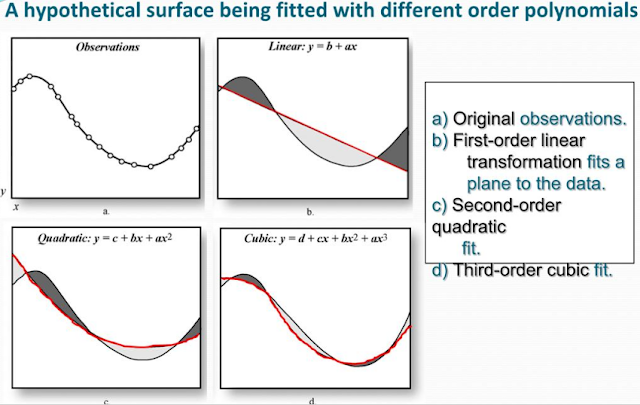

first-order transformation (figure 1) was used.

Figure 1: Differences of ordered polynomial transformations.
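The link between transformation order and the minimum GCP count can be illustrated with a short sketch. It assumes the standard two-dimensional polynomial model, in which a polynomial of order t has (t + 1)(t + 2) / 2 coefficients per output coordinate and each GCP supplies one equation per coordinate. The function name `min_gcps` is hypothetical and is not part of ERDAS Imagine.

```python
def min_gcps(order: int) -> int:
    """Minimum number of GCPs needed to solve a 2-D polynomial
    transformation of the given order (hypothetical helper)."""
    if order < 1:
        raise ValueError("order must be at least 1")
    # A 2-D polynomial of order t has (t + 1)(t + 2) / 2 coefficients
    # per output coordinate, and each GCP contributes one equation
    # per coordinate, so that many GCPs are needed at minimum.
    return (order + 1) * (order + 2) // 2


# Print the minimum GCP count for the first three orders.
for order in (1, 2, 3):
    print(f"order {order}: at least {min_gcps(order)} GCPs")
```

Placing more than the minimum number of GCPs leaves the model over-determined, which is what allows a residual error to be reported for each point.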

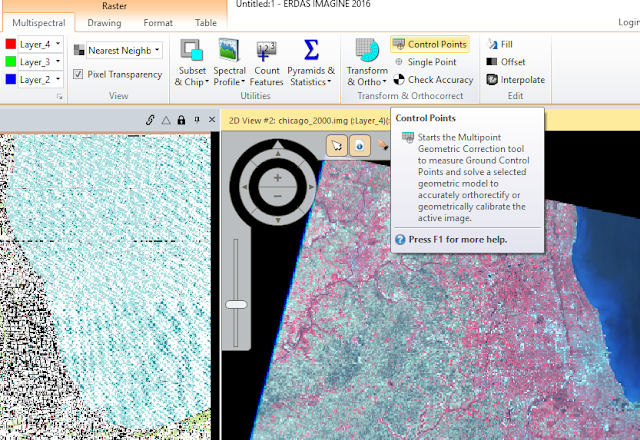

The order of transformation directly relates to the amount of GCPs required for correction, as the averaging becomes more complex and accurate. Using the

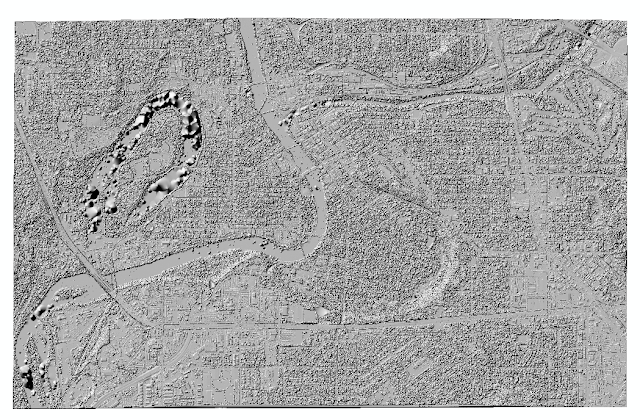

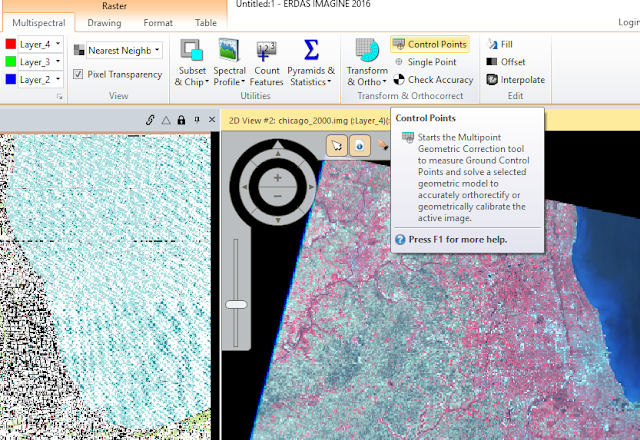

Multipoint Geometric Correction tool (figure 2) a dialog window opened allowing the geometric process to commence.

Figure 2: Add control points tool.

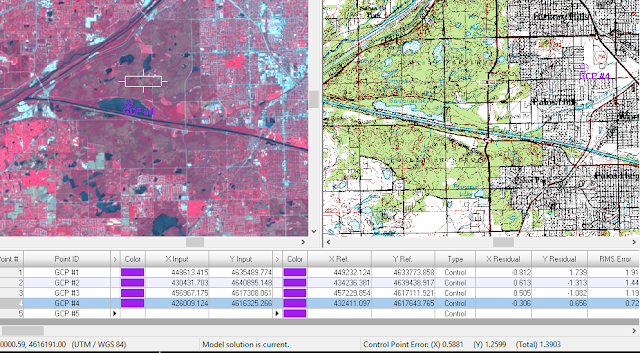

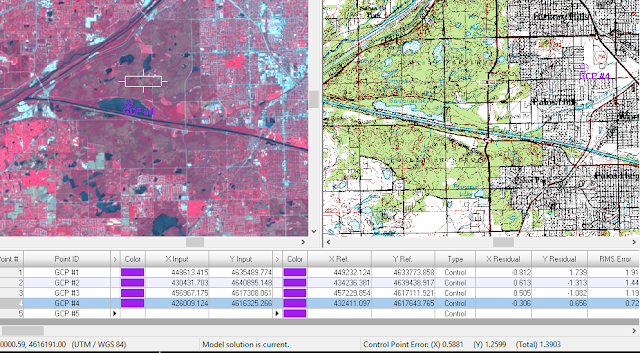

When the dialog window opened, the Polynomial Geometric Model was selected with a first-order transformation, which requires a minimum of three GCPs. Then, by clicking the Create GCP button in the dialog window (figure 3), a point was placed in both the multispectral image and the reference map (figure 4).

Figure 3: Create GCP button in Geometric Correction dialog window.

Figure 4: GCP #4 shown in both maps.

As seen in figure 4, the points did not initially fall on quite the same location in the two images. This was corrected by moving the points in either window until a Control Point Error (bottom right of figure 4) of less than 0.5 pixel was achieved. Once the images had accurate GCPs, the geometric correction was performed by creating an output file for the image, setting the resampling method to Nearest Neighbor, and running the calculation based on the GCPs placed in the images (figure 5).

Figure 5: Complete geometric correction.
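The idea behind fitting a first-order transformation to GCPs and checking the control point error can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the routine ERDAS Imagine runs: the function names and the GCP coordinates are hypothetical, and the residuals here are measured in reference-map units, whereas the software may report its error in input-image pixels.

```python
import numpy as np


def fit_first_order(src, ref):
    """Least-squares fit of x' = a0 + a1*x + a2*y and
    y' = b0 + b1*x + b2*y from paired GCPs (hypothetical helper)."""
    src = np.asarray(src, dtype=float)
    ref = np.asarray(ref, dtype=float)
    # Design matrix with columns [1, x, y], one row per GCP.
    design = np.column_stack([np.ones(len(src)), src[:, 0], src[:, 1]])
    coeffs, *_ = np.linalg.lstsq(design, ref, rcond=None)
    return coeffs  # shape (3, 2): one column each for x' and y'


def rms_errors(src, ref, coeffs):
    """Per-GCP residual distance and the total RMS error."""
    src = np.asarray(src, dtype=float)
    ref = np.asarray(ref, dtype=float)
    design = np.column_stack([np.ones(len(src)), src[:, 0], src[:, 1]])
    residuals = design @ coeffs - ref
    per_point = np.hypot(residuals[:, 0], residuals[:, 1])
    total = float(np.sqrt(np.mean(per_point ** 2)))
    return per_point, total


# Four made-up GCPs: image (column, row) and reference (x, y) pairs.
image_pts = [(10, 12), (480, 25), (35, 510), (470, 495)]
map_pts = [(1003.1, 2048.7), (1474.0, 2035.2), (1029.5, 1551.0), (1463.8, 1565.9)]

coeffs = fit_first_order(image_pts, map_pts)
per_point, total = rms_errors(image_pts, map_pts, coeffs)
print("per-GCP error:", np.round(per_point, 3))
print("total RMS error:", round(total, 3))
```

With exactly three GCPs a first-order fit passes through every point and the residuals are zero, so an error value only becomes informative once a fourth point, such as GCP #4 in figure 4, has been added.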

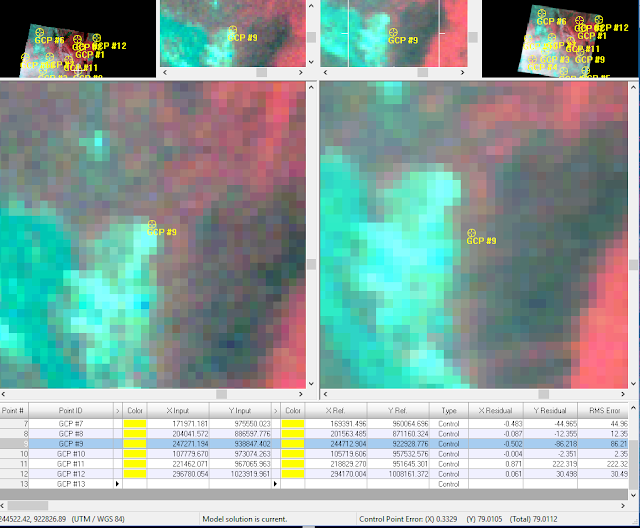

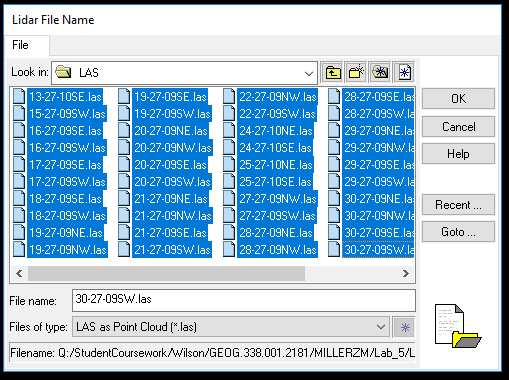

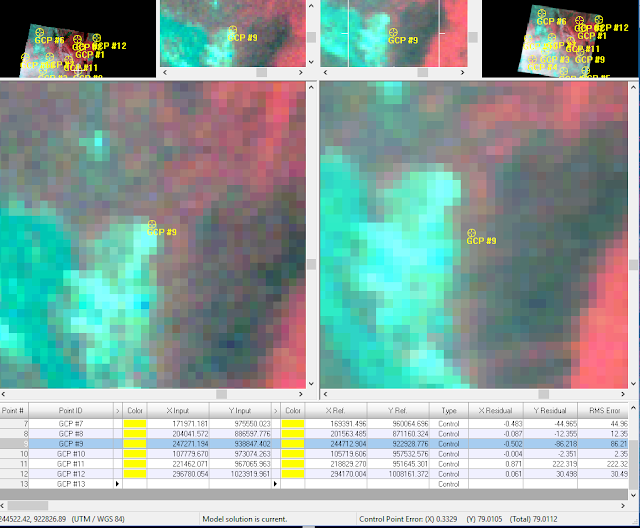

In the second part of this lab, geometric correction was performed on a different set of images: two multispectral images of an area in Sierra Leone. One image is quite distorted, while the other is geometrically correct and served as the reference for the first. Following a procedure similar to the previous part, the viewer containing the warped image was selected and the Multipoint Geometric Correction tool was used to add GCPs to the images. This part required more GCPs because a third-order transformation was used, which needs a minimum of 10 GCPs.

Figure 6: Adding GCPs to Sierra Leone images.

When calculating the geometric correction for these images, Bilinear Interpolation was used instead of Nearest Neighbor. This method was chosen because of the large number of GCPs used and a desire to preserve the shapes in the reference image as closely as possible.
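The difference between the two resampling methods can be shown on a tiny grid. The sketch below is a simplified illustration with hypothetical function names, not the ERDAS Imagine implementation. It samples a single fractional (row, column) position the way each method would when filling one output pixel.

```python
import numpy as np


def sample_nearest(img, row, col):
    """Return the value of the input pixel closest to (row, col)."""
    r = int(np.clip(np.rint(row), 0, img.shape[0] - 1))
    c = int(np.clip(np.rint(col), 0, img.shape[1] - 1))
    return img[r, c]


def sample_bilinear(img, row, col):
    """Distance-weighted average of the four pixels around (row, col)."""
    row = float(np.clip(row, 0, img.shape[0] - 1))
    col = float(np.clip(col, 0, img.shape[1] - 1))
    r0, c0 = int(np.floor(row)), int(np.floor(col))
    r1 = min(r0 + 1, img.shape[0] - 1)
    c1 = min(c0 + 1, img.shape[1] - 1)
    fr, fc = row - r0, col - c0
    # Interpolate along columns first, then along rows.
    top = (1 - fc) * img[r0, c0] + fc * img[r0, c1]
    bottom = (1 - fc) * img[r1, c0] + fc * img[r1, c1]
    return (1 - fr) * top + fr * bottom


# A 2 x 2 patch of made-up brightness values.
patch = np.array([[10.0, 20.0],
                  [30.0, 40.0]])

print("nearest: ", sample_nearest(patch, 0.2, 0.8))
print("bilinear:", sample_bilinear(patch, 0.2, 0.8))
```

Nearest Neighbor always returns a brightness value that exists in the input image, while Bilinear Interpolation blends neighboring values, which gives smoother edges at the cost of altering the original values.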

Results

Figure 7: Geometrically corrected multispectral image (Part 1).

Figure 8: Original multispectral image (Part 1).

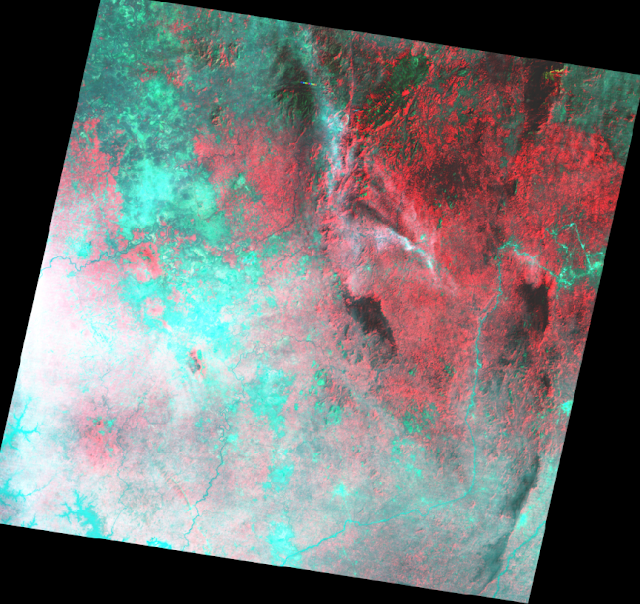

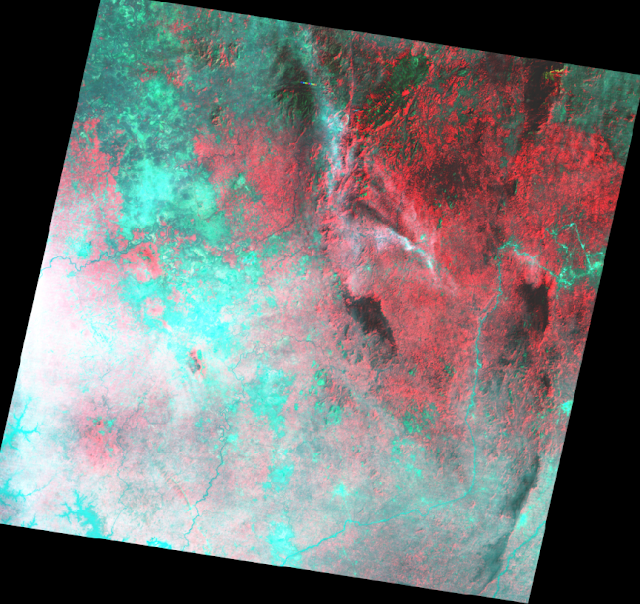

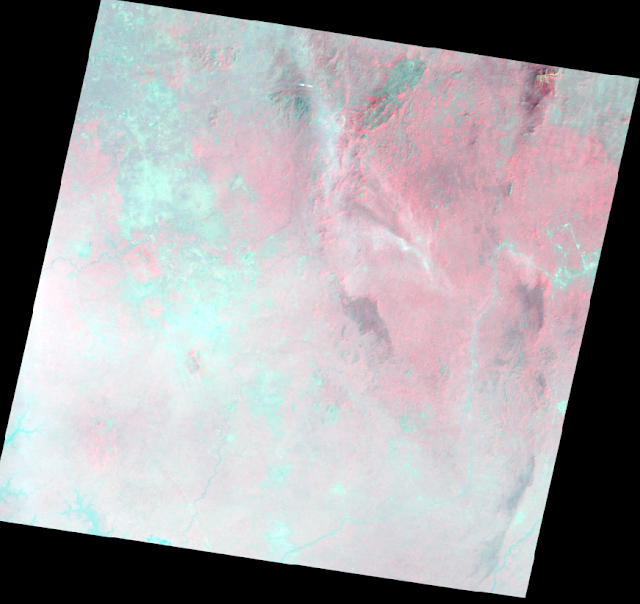

Figure 9: Original multispectral image (Part 2).

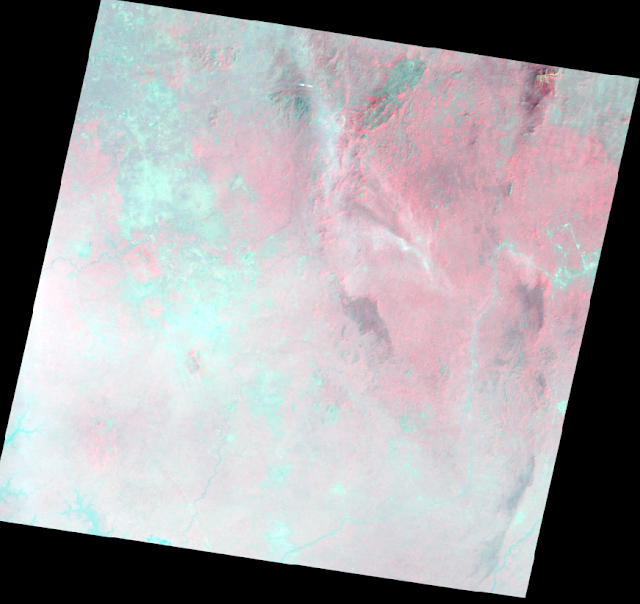

Figure 10: Geometrically corrected multispectral image (Part 2).

Conclusion

Looking at the geometrically corrected images in the results section (figures 7 and 10, respectively), the transformations look vastly different. In the corrected Chicago image (figure 7), the changes are slight. The image appears to be pushed slightly farther away from the viewer, showing more of Lake Michigan and the southwestern corner of Illinois. The corrected image also appears to pixelate sooner than the original image, so the correction appears to preserve shape while degrading spatial resolution.

As for the second geometrically corrected image (figure 10), the results are similar to those of the first part. The image appears farther away, and the color brilliance and spatial resolution appear diminished. However, the sizes and shapes of features in the corrected image are incredibly close to those of the reference image. Perhaps in a professional setting, the two images could be used in conjunction with each other to analyze features.

Overall, geometric correction of multispectral imagery can provide a much more spatially accurate image for remote sensing applications. The process was fairly easy to complete in ERDAS Imagine, as the prompts essentially required only input images, GCPs, and a resampling method. The image corrected with more GCPs appeared to be more geometrically accurate than the first corrected image, which used only 4 GCPs.